Artificial intelligence has evolved rapidly over the years. From driverless automobiles to voice automation in households, AI is no longer just a term used in sci-fi books and movies. The future of AI is arriving sooner than projected. Artificial Intelligence (AI) is emerging as an integral part of our lives and, in some tasks, is surpassing human analytical abilities.

However, discussions about algorithmic bias are bringing to light the flaws in AI systems once perceived as perfect. Algorithmic bias arises when a system's performance produces unfair outcomes. This unfairness takes different forms but can be understood as prejudice based on a particular distinction.

Over the years, diversity and inclusion have emerged as a critically significant issue, with growing focus on ensuring the success of work cultures and organizations around the world. Companies are struggling to reduce biases of all kinds in their operations while attempting to promote diversity and inclusion in their ecosystems.

The critical question here is: What is the root cause of bias in AI systems, and how can it be prevented?

Biases infiltrate algorithms in numerous forms. Even when variables such as gender, ethnicity, or identity are excluded, AI systems still learn and make decisions based on training data that may contain skewed human decisions or represent historical inequities.

There is ample proof of the discriminatory harm caused by AI tools. After all, AI is built by humans, and it is deployed in systems and institutions that have been marked by entrenched discrimination. From criminal legal systems to housing, workplace, and financial systems, bias is often baked into the outcomes through the data used to train the AI.

Data that is discriminatory toward, or unrepresentative of, people of color, women, or other marginalized groups can introduce biases into the AI's design framework. The tech industry's lack of representation of people who can work towards addressing these potential harms only exacerbates the problem.

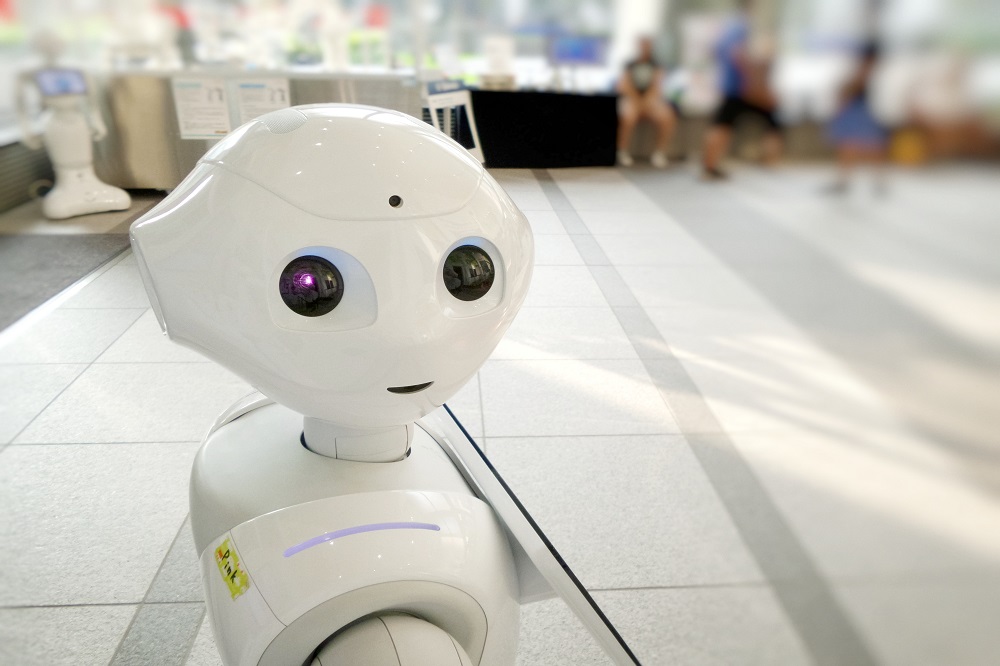


What is AI Bias?

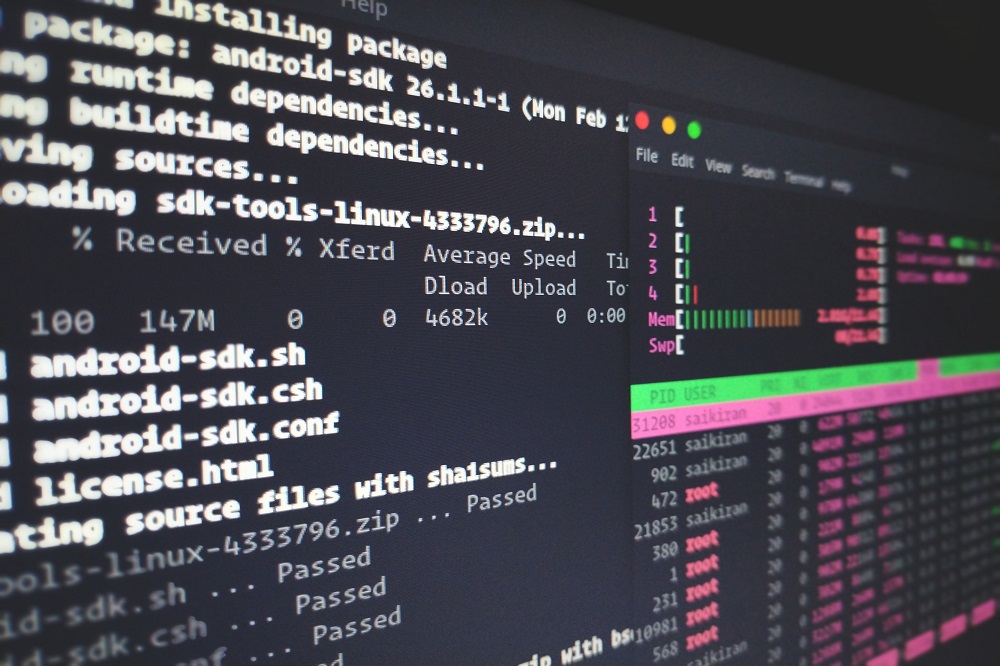

AI bias is an anomaly in the output of machine learning algorithms caused by prejudiced assumptions made during the algorithm's development or by prejudices in the historical data.

An AI framework is only as good as the quality of its input data. If the training dataset is free of conscious and unconscious assumptions, organizations can build AI systems that make unbiased, data-driven decisions. AI biases can be categorized as:

- Cognitive biases: These are unconscious errors in thinking that affect judgments and decisions. They arise from the attempt to simplify information processing. Cognitive biases can seep into machine learning or AI algorithms through designers who introduce them unknowingly.

- Biases due to lack of complete data: If the data set or historical data is incomplete, it may not be representative and can therefore introduce bias.

The cause for alarm is that decision-makers do not have a meaningful way to see the biased practices encoded into their systems. Biased predictions lead to biased behaviors. For this reason, there is an urgent need to understand what biases are lurking within the source data.

Today, systems are continually building on biased actions originating from biased sources. This creates a self-reinforcing cycle, a problem that compounds with every prediction. There is a need to install human-managed governors over the actions that result from machine learning predictions.
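
One way to start looking for bias in source data is to check how each group is represented and how often it received a positive outcome. The sketch below is illustrative only: the records, the group names `A` and `B`, and the `audit` and `rate_ratio` helpers are all hypothetical and not taken from any real system.

```python
from collections import Counter

# Hypothetical historical records: (group, outcome), where outcome 1 = positive.
# The values are invented purely for illustration.
records = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1), ("A", 0), ("A", 1),
    ("B", 0), ("B", 1), ("B", 0), ("B", 0),
]

def audit(rows):
    """Return each group's share of the data and its positive-outcome rate."""
    totals = Counter(group for group, _ in rows)
    positives = Counter(group for group, outcome in rows if outcome == 1)
    n = len(rows)
    return {
        group: {
            "share": totals[group] / n,
            "positive_rate": positives[group] / totals[group],
        }
        for group in totals
    }

def rate_ratio(report):
    """Ratio of the lowest to the highest positive rate across groups."""
    rates = [stats["positive_rate"] for stats in report.values()]
    return min(rates) / max(rates)

report = audit(records)
ratio = rate_ratio(report)

for group, stats in report.items():
    print(group, stats)
print("lowest/highest positive-rate ratio:", round(ratio, 3))
```

A ratio well below 1 does not prove discrimination on its own, but it flags where the historical data deserves a closer look before a model is trained on it. A commonly cited heuristic treats ratios below 0.8 as a warning sign; whether that threshold is appropriate depends on the context.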

The earlier the biases are detected and eliminated, the faster the risks can be mitigated. Organizations that are not addressing the bias now are exposing themselves to a myriad of unknown future risks, penalties, and lost revenue.


Real-world Cost of AI Bias

Machine learning is widely applied across applications that affect the public. These models rely on historical data, and when that data is biased, the models perpetuate the bias. However, understanding potential bias is the first step toward correcting it.

Example of AI bias: Amazon's Biased Recruiting Tool

Amazon started an AI project in 2014 with the goal of automating its recruiting process. The project used AI-powered algorithms to review job applicants' resumes and rate the applicants, sparing recruiters the time spent on manual resume screening. However, by 2015, Amazon realized that the recruiting system was not rating candidates fairly: it showed bias against women.

Amazon was using historical data from the previous 10 years to train its AI system. This data contained biases against women because of male dominance across the tech industry.

This led to 60% of men being employed by Amazon through the recruitment system. Amazon's recruiting system incorrectly learned that male candidates were preferable for the job openings. The system discarded resumes that included the word "women." This led to Amazon scrapping its AI recruitment system.


Underlying Data is often the Source of Bias

The underlying data, rather than the algorithm, is most often the main cause of the issue. AI systems are trained on data containing human decisions or on data that reflects the effects of societal or historical inequities.

Bias can be introduced into the data through the way it was collected or selected for use. Data generated by users can also create a feedback loop that reinforces bias.

How to Fix Biases in AI Algorithms?

It is important to acknowledge that AI biases stem from human prejudices. Hence, businesses need to focus on removing these prejudices from the historical data set. However, this process is not as easy as it seems.

A naive approach is to remove protected classes from the data and delete the labels that lead the algorithm to make biased decisions. Yet this approach might not work, as removing labels can affect the system's understanding and reduce its accuracy. To minimize AI bias, organizations need to test their historical data and algorithms and apply other best practices.
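
A further reason the naive approach can fall short is that other fields may act as stand-ins for the removed attribute. The sketch below uses invented resume records loosely inspired by the recruiting example; the field names (`gender`, `mentions_womens_club`, `hired`) and the helpers are hypothetical, and the numbers are not real data.

```python
# Hypothetical resume records, invented for illustration only.
resumes = [
    {"gender": "M", "mentions_womens_club": False, "hired": 1},
    {"gender": "M", "mentions_womens_club": False, "hired": 1},
    {"gender": "M", "mentions_womens_club": False, "hired": 1},
    {"gender": "M", "mentions_womens_club": False, "hired": 0},
    {"gender": "M", "mentions_womens_club": False, "hired": 1},
    {"gender": "M", "mentions_womens_club": False, "hired": 0},
    {"gender": "F", "mentions_womens_club": True, "hired": 0},
    {"gender": "F", "mentions_womens_club": True, "hired": 0},
    {"gender": "F", "mentions_womens_club": True, "hired": 0},
    {"gender": "F", "mentions_womens_club": False, "hired": 1},
]

def drop_column(rows, column):
    """Return copies of the rows without the given column."""
    return [{k: v for k, v in row.items() if k != column} for row in rows]

def hire_rate_by(rows, key):
    """Average of the 'hired' label for each distinct value of `key`."""
    groups = {}
    for row in rows:
        groups.setdefault(row[key], []).append(row["hired"])
    return {value: sum(labels) / len(labels) for value, labels in groups.items()}

# Remove the protected attribute, as the naive approach suggests.
blind = drop_column(resumes, "gender")

# The remaining proxy field still separates the historical outcomes.
by_proxy = hire_rate_by(blind, "mentions_womens_club")
print(by_proxy)
```

In this toy data, resumes that mention a women's club were never hired, so a model trained on the "blind" records could still learn the same pattern from the proxy field. This is why testing the data and the model's outputs matters more than simply deleting a column.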

Overcoming AI Bias

What is the solution to AI bias?

The simplest way is to put people at the helm of deciding when to take, and when not to take, real-world actions based on machine learning predictions.
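
As a rough illustration of keeping people in control, a system can act automatically only on predictions that clear a high bar and send everything else to a person. The `Prediction` class, the `route` function, and the 0.8 threshold below are assumptions made for this sketch, not a recommended policy.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    candidate_id: str
    score: float  # model's estimated probability of a positive outcome (0 to 1)

def route(pred: Prediction, advance_threshold: float = 0.8) -> str:
    """Advance automatically only on high scores; a person decides the rest.

    The threshold is a hypothetical value chosen for illustration.
    """
    if pred.score >= advance_threshold:
        return "advance"
    return "human_review"

predictions = [
    Prediction("c1", 0.92),
    Prediction("c2", 0.55),
    Prediction("c3", 0.05),
]

decisions = {p.candidate_id: route(p) for p in predictions}
review_queue = [cid for cid, decision in decisions.items() if decision == "human_review"]

print(decisions)
print("needs human review:", review_queue)
```

Note the design choice: the model never rejects anyone on its own. Every unfavorable or uncertain case reaches a human reviewer, which limits how far a biased prediction can travel before someone checks it.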

Transparency is critical for allowing people to understand AI and how the technology reaches certain decisions and makes predictions. By exposing the reasoning and uncovering the factors that influence ML predictions, algorithmic biases can be brought to the surface, and decisions can be adjusted to avoid costly penalties or negative feedback. Businesses now need to focus on explainability and transparency within their AI systems.
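
For a simple linear scoring model, one basic form of explainability is to list how much each input contributed to a score. The weights and feature names below are invented for illustration and assume a model of the form score = sum of weight times feature value; real systems are usually more complex and need dedicated explanation tools.

```python
# Hypothetical weights of a linear resume-scoring model (invented values).
weights = {
    "years_experience": 0.4,
    "has_degree": 0.8,
    "mentions_womens_club": -1.5,
}

# Hypothetical feature values for one applicant.
applicant = {
    "years_experience": 5,
    "has_degree": 1,
    "mentions_womens_club": 1,
}

def explain(weights, features):
    """Return each feature's contribution (weight * value) to the score."""
    return {name: weights[name] * features[name] for name in weights}

contributions = explain(weights, applicant)
score = sum(contributions.values())

# Sort by absolute size so the most influential factors come first.
ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)

for name, value in ranked:
    print(f"{name}: {value:+.2f}")
print(f"total score: {score:.2f}")
```

A large negative contribution from a field like `mentions_womens_club` is exactly the kind of factor that transparency is meant to surface, so that the decision can be questioned and the model corrected.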

Growing regulation and guidance from policymakers to mitigate biased AI practices can help cut down on radical bias.

With more precise guidance on artificial intelligence, organizations can work towards building ethically safe and sustainable algorithmic decision-making systems.

To maximize fairness and minimize bias in AI, the following paths need to be considered:

- Being mindful of the contexts in which AI can help correct the bias, as well as areas where there is a high risk of AI exacerbating bias.

- Establishing processes and practices to test and mitigate the AI system bias.

- Engaging in fact-based conversations about potential biases.

- Exploring ways for humans and machines to work together.

- Investing in bias research by making more data available for research and adopting a multidisciplinary approach.

- Investing more in diversifying the AI field.

Key Takeaways

- Algorithms do what they are fed and taught. Unfortunately, some are taught prejudices and unethical biases derived from societal patterns hidden in the existing historical data.

- To build responsible algorithms that generate ethical outputs, it is important to pay close attention to potential unintended discrimination and the harmful consequences arising from it.

- The key to building unbiased AI systems is setting guidelines that enable organizations to implement AI with confidence and trust.

In Conclusion

AI was built to make people's lives more comfortable, productive, and efficient. Currently, the lack of diversity allows for just a one-sided view behind AI algorithms, which affects people's daily lives and the many kinds of decisions made about them. To unlock the full potential of automation and create unbiased change, humans need to understand how and why AI bias leads to specific outcomes and how to drive the change.

Are AI's decisions less biased than humans'? Will AI bias make these problems worse?

In seeking an explanation for AI bias in general, it is important to consider the global societal complexities and the fundamental transitions emerging at the social level.
